Instance-level Context Attention Network for instance segmentation

Image credit: Unsplash

Abstract

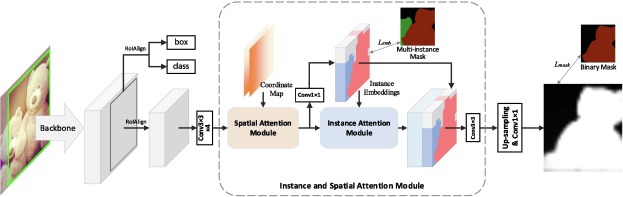

Instance segmentation has made great progress in recent years. However, current mainstream detection-based methods do not explicitly distinguish between different instances within the same detected region. This makes it hard to segment the correct instance when a detected region contains multiple instances. To address this problem, we propose an Instance-level Context Attention Network (ICANet). ICANet generates more discriminative features for different instances by exploiting the context within each instance's own scope, which we call instance-level context. Specifically, given a detected region, we first propose an instance attention module that learns an embedding space to produce instance-specific attention maps. These maps focus on the relationships between pairs of pixels that belong to the same instance. With these attention maps, features from the same instance reinforce one another, while features from different instances become more discriminative. Next, we propose a spatial attention module that incorporates spatial information and enhances feature representations based on both feature and spatial relations. Finally, to obtain clearer attention maps, we propose a weight clipping strategy that filters out noise by cutting off low attention weights. We perform extensive experiments to verify the effectiveness of the proposed method. Our method consistently outperforms the baseline by over 1.5% on the COCO dataset with different backbones and by 3.7% on the Cityscapes dataset.
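The core idea behind the instance attention module and the weight clipping strategy can be illustrated with a small pure-Python sketch. This is not the authors' implementation. The dot-product affinity, the row-wise softmax, the fixed threshold `tau`, the renormalization after clipping and the residual addition are all assumptions made for illustration. The names `instance_attention` and `clip_weights` are hypothetical, and the spatial attention module is not modeled. Each pixel aggregates features from pixels whose learned embeddings are similar to its own. Clipping then removes the small weights that would otherwise leak features across instances.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def clip_weights(row: List[float], tau: float) -> List[float]:
    # Hypothetical weight clipping (assumed form): zero out attention weights
    # below `tau`, then renormalize so the surviving weights sum to 1 again.
    kept = [w if w >= tau else 0.0 for w in row]
    total = sum(kept)
    if total == 0.0:
        return row  # nothing survived the threshold; keep the unclipped weights
    return [w / total for w in kept]


def instance_attention(embeddings: Matrix, features: Matrix, tau: float = 0.0) -> Matrix:
    """Hypothetical sketch: aggregate features from pixels with similar embeddings.

    embeddings: N x d per-pixel embeddings, assumed to be learned so that pixels
                of the same instance lie close together.
    features:   N x c per-pixel features of one detected region.
    """
    n = len(embeddings)
    out: Matrix = []
    for i in range(n):
        # Pairwise affinity between pixel i and every pixel j (dot product, assumed).
        scores = [sum(a * b for a, b in zip(embeddings[i], embeddings[j])) for j in range(n)]
        weights = clip_weights(softmax(scores), tau)
        # Attention-weighted sum of features, added back as a residual (assumed).
        agg = [sum(weights[j] * features[j][k] for j in range(n)) for k in range(len(features[i]))]
        out.append([f + a for f, a in zip(features[i], agg)])
    return out


# Two instances in one region: pixels 0-1 share one embedding, pixels 2-3 another.
emb = [[5.0, 0.0], [5.0, 0.0], [0.0, 5.0], [0.0, 5.0]]
feat = [[1.0], [1.0], [-1.0], [-1.0]]
print(instance_attention(emb, feat, tau=0.01))  # → [[2.0], [2.0], [-2.0], [-2.0]]
```

In the usage example, features of the same instance reinforce each other. After clipping, no feature from the other instance leaks in. Without clipping (`tau=0.0`), each output would carry a tiny contribution from the other instance. That contribution is the kind of low-weight noise the clipping step is meant to suppress.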